TheStateOfGarnet2025

Garnet is my attempt at making a programming language that could be summed up as “What if Rust was simple?” The goal is to make a small, borrow-checked language aimed at lower-level programming, designed for portability and robustness.

Previously:

Last updated Jan 2025.

What got done in 2024

- I made monomorphization kinda work, so I can now write (some) generic functions in Garnet that are impossible to write in Rust. Which I then promptly broke, because…

- I got to the point where monomorph needed more information from the typechecker to work, sighed deeply and decided it was time to Git Gud. So I…

- Bought Types And Programming Languages and read it, which was actually incredibly useful. Should have done that years ago, but years ago I might not have known what I wanted out of it. But it taught me enough to that I…

- Experimented a bunch with different type checker approaches and wrote a functioning proof of concept that theoretically does everything that I want Garnet to do, if not very conveniently. In the process I…

- Made const propagation work pretty much by accident, so I think handling the rest of the properties system shouldn’t be too hard. I just need to convince people (including myself) not to turn it into algebraic effects. As part of that I…

- Refactored some infrastructure for doing code rewrite passes, ’cause I was sick of the recursion-scheme approach. This has made some of the code a lot longer and other parts shorter, but the end result is universally easier to read and more powerful. So now I’m working on…

- Writing New Type Checker #4.

Type checker work

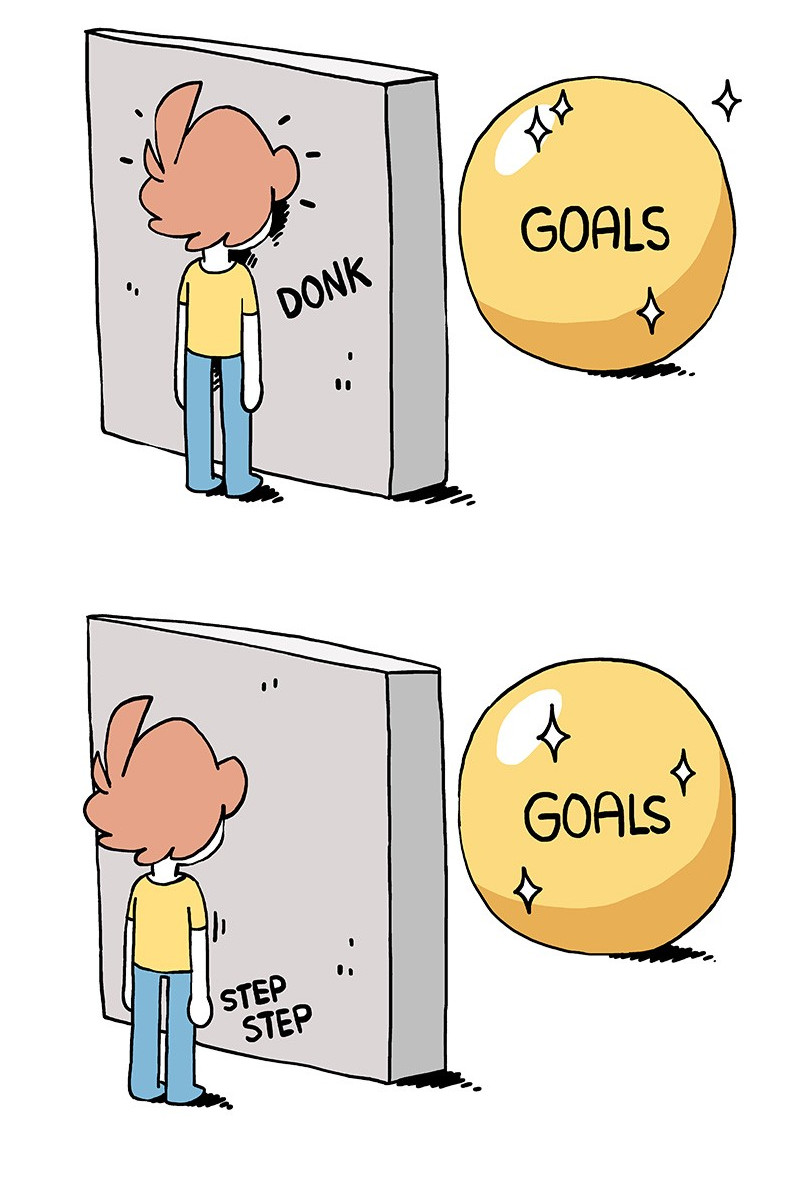

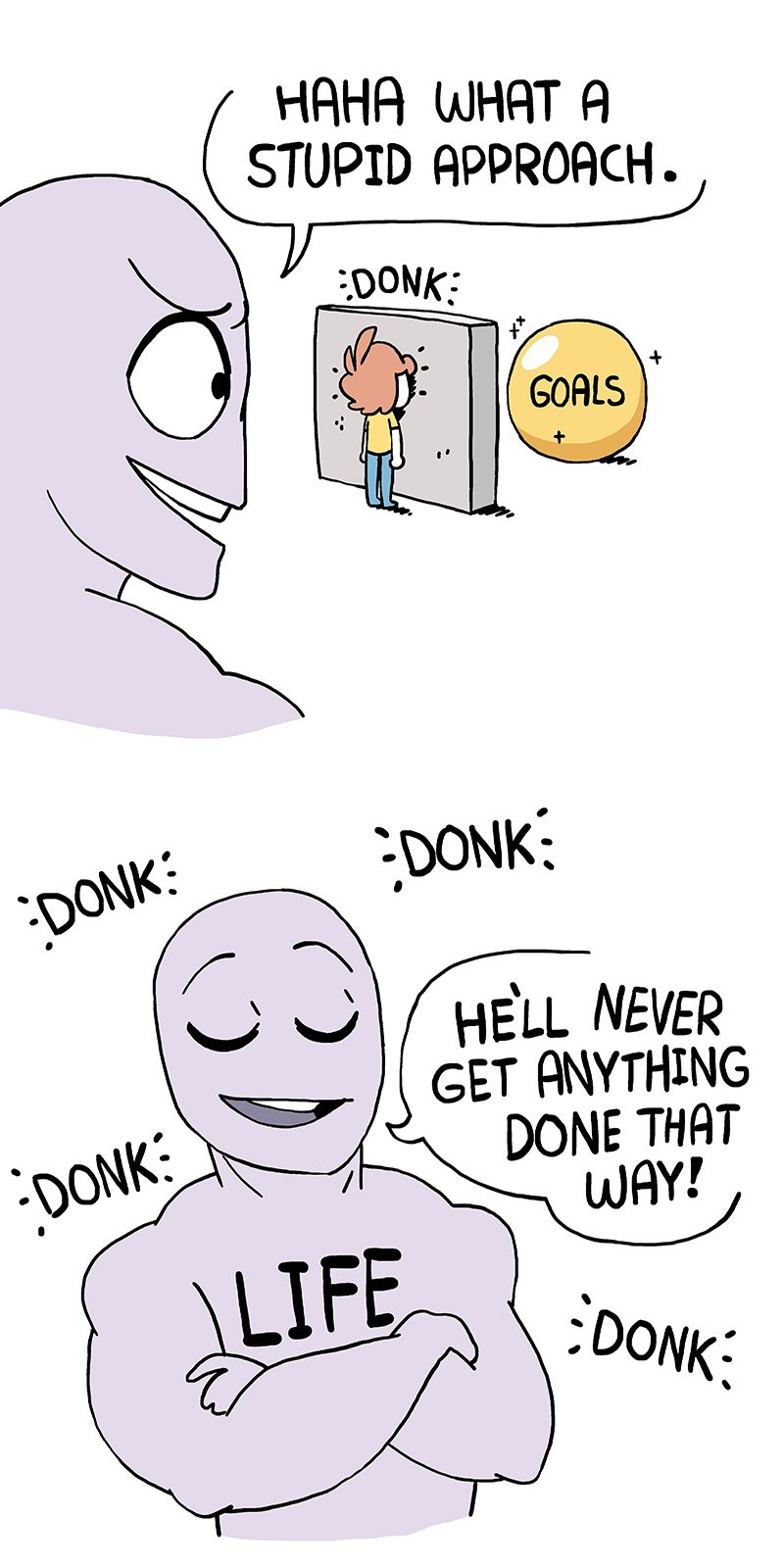

Well, my plan for Garnet’s type system always had a step in it that said “I don’t really know what to do here, so I’ll just do the stupid thing and push it as far as I can until I hit a wall.” Turns out if you do the stupid thing hard enough it leads you into some pretty advanced CS research. Not sure how to do metaprogramming? Just make generic structs full of closures. Not sure how to do fancy type system stuff? Evaluate everything possible at compile-time until you get something concrete. Sick of writing your own inference engine for every little thing? Let’s just try to just use Datalog. It hasn’t been great for Garnet’s goal of “don’t be a research project”, but has certainly been an interesting ride.

The upside of this is that I thiiiiink I know how to do pretty much everything I need to write Garnet now. Up to optimization and code generation, at least. But the backend is stuff I’ve done before; making shitty non-optimizing codegen is pretty easy, and making it less shitty is an incremental process that’s kinda orthogonal to the rest of the language. I guess writing a good borrow checker is still gonna be hard. And frankly you could spend your entire life on a single compiler backend and not be done with it, and people have. But that’s all just A Small Matter Of Programming.

The downside of this is that I’ve spent like… uh, jeez, like three and a half years or something working on type checking. That’s kinda depressing. Whatever window of opportunity Garnet may have had as a useful system programming language is probably closed by now. But I wanna make this thing exist dammit, so finally I just sat down, got some textbooks, and gave myself an undergrad class in type theory. Turns out, it’s not actually that difficult. It is confusing and loopy and recursive and hugely context sensitive and everyone uses a different damn greek letter for everything, but at its heart a lot of it is just layers of lambda calculus. So if you understand lambda calculus, even only conceptually, then type theory is something you can figure out.

So here’s the secret: a type checker is an interpreter.

Again, a type checker is an interpreter. When you

get into the theoretical foundations, types are treated just like values

that are only allowed to occur in certain places in the program, and the

type systems discussed in papers are programming languages descended

from lambda calculus. These programming languages are usually not

Turing-complete, and in real languages it is very heavily mixed up with

the “normal” language, so trying to translate what I already knew into

the stuff type theory lesson and papers talked about was hard as

balls to me. For example in Rust, writing

Vec::<i32>::new() is really two function calls. One

is the “type language” function call Vec::<i32> that

has the signature Type -> Type, and the other is the

::new() function call is defined by the type the first one

returns. But the syntax makes it look like two unrelated things. When

you get into the math, types in type systems work exactly like values do

in normal expressions. You can bind them to variables, pass them to

functions (of the correct signature), hide them in closures, etc. So you

have this lambda-calculus-looking “type language” hanging off certain

parts of your normal program’s “term language”, and as it gets fancier

then figuring out the actual values produced by the type language –aka

checking those declarations against the types actually used in the term

language– looks more and more like interpreting it. So if you can write

a lambda calculus interpreter, you can write a type checker.

A compiler is an interpreter too, of

course. I’d always intended Garnet to lean fairly hard on

compile-time evaluation to one extent or another, but I always felt

kinda dirty about it. But I didn’t need to feel dirty, I should have

just embraced it the whole time. Turns out that when you write code that

generates new types at compile-time, you end up very literally having to

execute it like any other program, and the easy way to do that is with

an interpreter. Zig has done a great job showing that you can just do

this and it mostly Works Fine, if you build the language around it

enough. I’m not sure I’m going to build Garnet explicitly around

comptime evaluation like Zig does, ’cause it sure has some

very real drawbacks as well, but it’s nice to have some confirmation

that it’s not something that is automatically a huge mistake.

Most languages you’ll use in everyday life do their best to paper this over with syntactic sugar and semi-arbitrary limitations. I dunno why; maybe just as a convenient shortcut. Some of the limitations make life easier for the compiler, or maybe it’s just ’cause these languages just didn’t start with this sort of type system and have a hard time retrofitting it onto an already-existing language. I expect Garnet will end up doing a bit papering-over of its own, honestly, but it sure does make it a pain in the ass to learn a programming language and then learn the type theory behind it.

So yeah, my original idea for metaprogramming in Garnet, which went “let’s just make structs and closures and generics and see how far you can push it”? Yeah, you can push it all the way. As I’ve discovered the hard way, you don’t really need a lot of things like Rust’s traits, associated types, or similar concepts. You can write ’em with structs, closures and generics, especially if you get a little creative with your definition of “closure”. But rawdogging the type-level code that way isn’t much fun, so we’re almost certainly gonna make a thin layer of sugar atop it. Work shall continue.

The results of all this suffering

I’m actually totally gonna do higher-kinded types in Garnet, which is

slightly insane but I think will be worth it. You’re never gonna make

anything interesting happen if you never do anything slightly

insane. For my fellow grug-brains, higher-kinded types basically let you

have generic types that take type arguments. So in Rust, which doesn’t

have them, you can write a type Vec<T> where T: Ord

but you can’t write

T1<T2> where T1: VecLikeThing, T2: Ord. Basically

Vec<T> is like a function that takes a type

T, but it’s not a first-class function that you

can slot into other expressions. HKT’s more or less mean that you can

have these type-functions and treat them as first-class values inside

types. There’s three main reasons I want this:

- There’s some nice ideas I want to implement that would benefit from this. I THINK you can implement these things without HKT’s just by hacking around some special cases, but I really don’t want to find out the hard way that you can’t. Rossberg’s 1ML seems like one of those things that is obviously an improvement over the existing state of the art, but as far as I can tell nobody since it was published has just sat down and implemented a non-research language around it. So let’s do that.

- Rust has lots of little places where HKT’s would let you do things that get people confused ’cause you feel like they should be possible, but they aren’t. So adding them will hopefully be useful in multiple places.

- I think that HKT’s will let Garnet do some things I want it to do,

mostly by accident. For example, I’ve always had a stupid

no-idea-how-this-is-gonna-work wishlist item for Garnet that goes

something like “can we please not have

iter(),iter_mut()andinto_iter()all be entirely separate functions you have to write by hand each time?” Turns out you can write a genericiter()function that returns any type of borrow, by being able to write HKT generics over different kinds. So you can replacefn get<'a>(&'a self) -> &'a Tandfn get_mut<'a>(&'a mut self) -> &'a mut Twith a singlefn get<'a, Borrow>(Borrow<'a, Self>) -> Borrow<'a, T>. You can write this right now in Zig, if not exactly nicely, so I’m reasonably sure it’ll work. Will it work well? Let’s find out. - They’re not actually that complicated to typecheck, at least when you have a bare-bones version.

Higher-kinded types also have downsides, of course:

- I don’t know how the hell to implement them. Except I do now! The harder trick is going to be figuring out how to compile them to machine code, I suspect, but if I make Garnet refuse to compile anything that would require runtime evaluation then I think it won’t be too bad.

- Similarly, you kiiiiiiinda can’t monomorphize HKT’s, or so I’ve been told. I suppose I’m gonna find out for real, and probably spend a year banging my head against it, but in general Garnet should be pretty okay doing less monomorph than Rust does. Rust is very hardcore about its monomorph and inlining, and that gives it great runtime performance, but also has real downsides in the language and implementation when it comes down to things like ABI. I think that if HKT’s are used sparingly, then an optimization hazard or two won’t hurt much. You’ll always be able to copypasta some code to rewrite something without HKT’s, after all.

- HKT’s probably suck ass a little for type inference. This doesn’t bother me much, at least so far. I think that requiring type declarations for function signatures will solve a lot of the biggest nuisances, and if HKT’s are used rarely already then it shouldn’t be a huge problem. We’ll see how it feels in practice.

- They’re the feature that Let You Do Monads. IMO monads are not exactly a desirable feature that let you write robust and approachable code. As a card-carrying member of the Rust Evangelism Strike Force, I know a toxic fanbase when I see it, and the amount of ink spilled on peoples’ favorite type system features is downright absurd. So I am simply going to say that there are perfectly good reasons to use monads, and also I don’t intend to have them in the stdlib ’cause you usually don’t need them. Write all the monads you want as third-party libraries, I’ll happily sit back and watch. You can tell me how I’m doing it wrong when you spend four years making your own type checker. Watch, the lobste.rs thread on this article will spawn 30 heated comments about this one statement and about 4 other comments about the entire rest of the article.

References for people interested in this:

- https://smallcultfollowing.com/babysteps/blog/2016/11/02/associated-type-constructors-part-1-basic-concepts-and-introduction/

- https://smallcultfollowing.com/babysteps/blog/2016/11/03/associated-type-constructors-part-2-family-traits/

- https://smallcultfollowing.com/babysteps/blog/2016/11/04/associated-type-constructors-part-3-what-higher-kinded-types-might-look-like/

- https://smallcultfollowing.com/babysteps/blog/2016/11/09/associated-type-constructors-part-4-unifying-atc-and-hkt/

- https://www.reddit.com/r/rust/comments/6seaf8/why_are_higher_kinded_types_requested_in_rust/

- https://users.rust-lang.org/t/lifetimes-on-associated-types/2728/3

Borrow checking

A common question/criticism of Garnet from non-language-nerds is “how are you going to make Rust’s borrow checker less painful?” Easy answer: I’m not.

If you want “Rust’s borrow checker but with a bit more slack”, that’s totally valid, but the only way I know of to add that slack is with more runtime help – a garbage collector or automatic reference counting, a JIT with runtime-analysis, stuff like that. But Garnet isn’t a “do things for you” language, it’s a “do what you tell it to” language. If you don’t need care about the details that much, great! Use a different language. That’s fine. Use the right tool for the job!

I do think that “borrow checker with more slack” a really cool and useful design space that needs exploration. The language that does it really well could become the Java or C# or Go for the next 20 years, in terms of popularity and utility. There’s a few languages exploring this by now, but it’s a tough area that is IMO very under-researched by both academia and industry, so you gotta do a lot of the “figure out what actually works well” yourself just like Rust did. There’s no big pile of theory and best practices lying around just waiting for someone to put together into a nice package. But Garnet just isn’t aimed at that design space. When you need something to do exactly what you tell it to, Garnet will be there waiting for you, smaller than Rust and safer than Zig or Odin.

That said, there’s a fair few rough edges in Rust’s borrow checker that I would like to file down:

- Pattern matching on boxes and other reference-like things

- As described, HKT’s allow one to be generic over reference types, maybe even in a way nice enough to be worth doing

- Better partial borrowing of structures and arrays – this edges towards row polymorphism and dependent types, which I’d kinda prefer to avoid, but it would be nice to tinker with.

Some of those are just ergonomic, some of them involve real improvements in power. But to start with, Garnet’s initial borrow checker is gonna be pretty primitive, ’cause I still don’t reeeeeally know how to write a fancier one. There’s really one key limitation I’m going to to start with: data elements like structs or arrays won’t contain references. I believe this is the same restriction Austral makes, and it is the 90% solution. References mainly get used as arguments to function calls anyway, and since function calls (in the absence of async) always form a DAG, checking for these is relatively easy.

This is conceptually a pretty big restriction, but it’s one that

mostly shows up in very specific places: iterators,

reference-like wrapper types such as Cow, stuff like that.

The aforementioned “borrow checker with some more slack” languages could

make these features special cases or use other constructs

like interior iteration to provide them instead, but that requires a

level of runtime support I want Garnet to avoid. So in the short term

I’ll just hack those features in with unsafe code, and in the long run I

don’t see any good choice for Garnet except to grow a full-featured

Rust-like borrow checker. We’ll see how it goes.

Random compiler engineering lessons

This is probably not too interesting to anyone who isn’t writing a

compiler, so feel free to skip this if you want. For everyone else,

here’s some thoughts I had while reading through the

garnetc code recently and pondering how it was put

together.

First off, having a high-level IR layer between your AST and codegen

is great – these days, the old front-end and back-end division in most

compilers looks a lot more like front-end, middle-end and

back-end. It used to be that the middle-end was small and simple enough

to be considered part of the front-end; it just made sure variables were

valid, did very basic type-checking without generics, and maybe resolved

module imports if you were fancy. By now though it’s a pretty

significant part of many compilers – as I said, type checkers are

basically special-purpose interpreters now. So decoupling that step from

both the parser and the codegen lets you mess with the type-checker or

mess with the language syntax without needing to change much else. While

the front-end builds a syntax tree, and the back-end usually works with

a graph of basic blocks in one way or another, the middle-end often

likes to work on a “lowered syntax tree” of some kind or another. In

garnetc it’s a slightly-cleaned-up AST I just call

hir, in rustc it’s called “MIR” and is lower

level than Garnet’s hir but is still recognizably a tree of

program expressions instead of a list of assembly instructions. As

always, these boundaries are fuzzy and artificial, but what started out

as “I’m going to regret duplicating all this AST stuff aren’t I” has

turned out startlingly pleasant in practice as the compiler grows and

changes.

I didn’t really expect this, in my

functional-programming-language-coded brain, but the visitor

pattern when done right is a really nice way of walking

over a heterogenous tree like an AST. I could write a lot more about it

but the key is the slightly mind-melting interaction between

visit() and walk() functions mentioned in the

link above. Instead of a map() function that has to apply a

function to a node and decide whether and how to recurse on

child nodes, walk() only does the recursion, and

visit() only does the application, and unlike my naive

map() implementation, visit() gets to decide

explicitly when to call walk(). It gives you a sort of

double-dispatch that is very flexible and modular, vs. writing all the

recursion code by hand each time and, in my experience, spending a

lot of time finding the small important differences are in a

swath of near-identical boilerplate. Switching from

map(Expr, Fn(Expr) -> Expr) -> Expr to a visitor has

already solved a couple of bugs. You can write

walk() as higher-order

functions that look more like map(), but you quite

rapidly want multiple versions of the function for different node types,

and if you decide to pack them all into a struct full of callbacks and

default functions it starts looking a whole lot like a visitor anyway.

Writing visitors correctly still isn’t trivial, but it slots a lot more

easily into my brain than a Haskell-style API with deep piles of

combinators. That said, some people (like the blog post previously

linked) do a really good job with the piles-of-combinators approach, so

both are valid.

Punting most of the back-end until later has been a heckin’ great idea. With a new type checker I’m probably gonna rewrite the entire HIR layer, which would have to change what the back-end ingests anyway, so putting a lot of work into it would just cause more work to be fixed up when the HIR changes. And since in the long term I don’t want to use LLVM or compile to C or anything like that, I’m going to end up rewriting the back-end again when I rewrite this in Garnet to bootstrap it. So far, my “it’s stupid but it works” solution of outputting Rust code has worked absurdly well. Which is a shame, ’cause I think codegen is a lot more fun than type-checking, but I did the intelligent thing for once and started this project by tackling the hardest problem.

Unfortunately, writing the compiler in Rust was the right move. The

right move SHOULD have been OCaml. While it’s been ages since I touched

it for real I know OCaml, I love OCaml, and Rust’s control over memory

adds nothing useful to a bootstrap compiler that doesn’t need to be

particularly fast. But I recently read through a bunch of someone else’s

OCaml code, hacked on it some, tinkered around, translated bits of it to

and from Rust, etc, and… hoo boy, actually using Rust is so much

nicer than OCaml. (The caveat of course being that I already know

Rust quite well.) Only like 20% of that niceness is due to the language

itself. I low key hate OCaml’s lack of namespacing for enum and struct

members, but all its other nuisances I pretty much know how to work

around. But holy fuck I do not want to learn to write a

dune package. I do not want to learn Nix just so I can use

dune consistently. I do not want to figure out how to get

OCaml libraries as good as argh or logos or

lang_tester or criterion or log

or codespan-reporting/ariadne. I’m sure it’s

possible, more or less, but I don’t want to bother. Is this laziness?

Well, yes. It absolutely

is. So I just went with what I knew, and it turned out to be the

right choice. I could also have used F# or Haskell; they might be pretty

okay, but Haskell has tooling problems as least as ugly-sounding as

OCaml does. Maybe writing it in F# would have been the better move, I’d

have to Git Gud at F# but it sounds more fun than dune

does. But cargo is right there, sitting around

just working reliably, easily and consistently, pretty much the same way

it did in 2017. The Rust ecosystem feels like it has caused a gigantic

brain-drain of capable toolsmiths from other languages, so I might as

well take advantage of it.

So yeah. If any other language dev nerds want to write a non-trivial compiler and keep it going for years, that’s my wisdom to pass on to them.

Conclusion